Works, Projects, & Pieces (Past, Present, and Future).

Goldfish Variations – Variation I, 2023

Goldfish Variations (2023) was born out of my explorations of new methods for performing with and controlling Eurorack modular synthesizers, and of turning them into what I hope are cohesive, musically expressive, and interesting instruments for performance and composition. The original piece, commissioned by Dr. Donn Schaefer (bass trombone) of the University of Utah, was composed for bass trombone and electronics (Curve, my new instrument for musical expression, and a Eurorack modular synthesizer). I have since written further variations on that original piece, each of which utilizes a different external, traditional musical instrument. Incorporating the external instrument (the bass trombone in this case) into my synthesizer’s signal flow has always been of particular interest to me because of the many ways I can process and fuse its signal with the synthesizer’s own, effectively transforming the external instrument into another “module” or facet of the synthesizer while it remains a traditional instrument in its own right. This fusion of traditional instrument and electronics gives my collaborators and me a wide range of musical and performative possibilities to explore together in our compositions and performances.

Kaurios, 2018

This instrument consists of two handheld units, one for each of my hands. Each unit incorporates an array of five momentary metal push-buttons with RGB LEDs, a joystick, a touch-fader (soft potentiometer), and a nine-degrees-of-freedom motion sensor; the right-hand unit adds a laser-based distance sensor. Both units are completely wireless and communicate using MIDI over Bluetooth Low Energy, allowing for integration with any MIDI-compatible DAW or device. The accompanying piece explores and transforms the original sounds of my shruti box into a new sonic tapestry while simultaneously developing the performance practice that emerges with the invention of a new instrument.

ArcMorph, 2018

The Arc (designed and built by Monome) consists of only two endless encoders, each with an array of 64 LEDs that can be used to visualize the position of its dial. Pairing it with another customizable interface, the Sensel Morph, gave me the button-type control I needed to finish this piece and realize it as a complete work. The minimalism of the Arc lends a sense of elegance to my performative interface and allows me to focus on exploring the sonic details and minutiae of the original shruti box samples.

Impromptu No.1, for ShrutiBox+, 2018

Bellows-driven reed instruments such as bagpipes, accordions, harmoniums, and shruti boxes have been among my favorite instruments for as long as I can remember. The sound produced by the buzzing of the reeds as air rushes past them is especially rich and complex, which makes it well suited to the kind of detailed sonic exploration that is the main concept behind this piece. ShrutiBox+ is an electronic enhancement and augmentation of the original instrument that gives me several additional points of interaction with the shruti box. These new points of interaction allow me to explore the sound of the shruti box in the detail that it deserves, all while performing with the instrument itself in real time.

Multios, 2018

I wanted to challenge myself to both design a fully fledged data-driven instrument and compose an entire piece utilizing only my mobile devices. Every aspect of the creation of this piece was done on my iPad, from the sound design and data mapping to the composition and subsequent real-time performance. My iPhone completes my performance interface by affording me an additional means of control over the structure of the piece. Musically, Multios is an iOS-based journey through the sounds of my shruti box, relying heavily on a variety of sonically transformational techniques to explore the original sounds of the instrument.

Étude No.1, for Curve, 2017

Étude No.1, for Curve is the first-ever composition for Curve, my new interface. I wrote it to study and explore the control, performative possibilities, and affordances that Curve offers. However, I did not simply want to compose a study using the interface, but a substantial musical piece in its own right. To that end, the piece is broken up into four sections, each highlighting a specific performative technique that I developed for the instrument. Each section is denoted by a different method of physical interaction with the instrument, as well as a unique lighting mode designed to correspond to and emphasize that technique.

T(Re)es, 2017

This piece is a remix and reimagining of an original composition I wrote years ago for my band, Hamilton Beach. I broke down each individual part of the original into very small, simple audio samples and loops that I used as the musical palette for the remix. This technique allowed me to maintain a strong connection to the original piece while still being able to slowly craft and shape an entirely new sonic journey throughout the performance. By utilizing only the Monome as the performance interface for this piece, I was able to stay true to the soul of the original music.

Trio 1-465, 2016

I had wanted for years to write a piece involving turntables and other instruments, and Trio 1-465 is the culmination of that idea. The addition of cello and my own custom-built interface, which utilizes Contour Design’s RollerMouse Red Plus, Max/MSP, and Ableton Live, ended up yielding fantastic performative and aural results. I formed the piece around the notion of a jazz trio: I wanted each instrument’s voice to have an equal say in the final soundscape while staying true to that which makes each instrument unique. The idea of using the turntables to sample the sounds of the cello in real time and then manipulate those same sounds, also in real time, was exceptionally intriguing to me. We were able to realize that idea using Ms. Pinky’s custom vinyl and software. By transforming the turntables into data generators using the Ms. Pinky vinyl, I was able to capture the sounds of the cello in Max/MSP and then map the corresponding data streams from each turntable to each new sound so as to maintain the same affordances that a traditional turntable and vinyl record would provide. The musical form is divided into seven sections, each of which takes on one of the seven diatonic modes, commonly called the church modes: Ionian, Dorian, Phrygian, Lydian, Mixolydian, Aeolian, and Locrian. Each section (or mode) is improvised, so the musical structure is globally determined but locally improvised in real time, anew with each performance. The result is an ensemble and musical journey that pays homage to traditional ideas of ensemble and musical form, while simultaneously creating something that has never been seen or heard before. An immeasurable amount of thanks goes to my fellow collaborators and performers, Zachary Boyt (cello) and Connor Sullivan (turntables).

LogDrum +, 2016

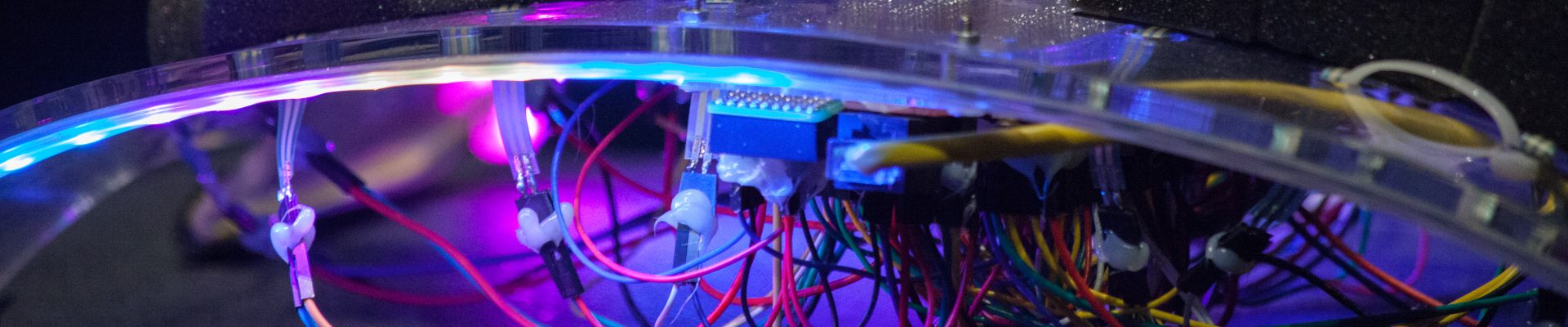

Augmenting and expanding upon an established acoustic instrument had interested me for some time. I have always loved the warm, natural sound of wooden instruments, so the log drum was a perfect candidate for this project. I custom-built the encasement for the log drum out of laser-cut acrylic, then attached four sensors (two force-sensitive resistors and two touch-potentiometers), a keypad (to control a looper), and two high-quality condenser contact microphones to it. The sensors all run through an Arduino Mega, which passes each sensor’s data stream to the computer in real time. Because the contact microphones also give me access to the natural sound of the log drum, I have complete real-time control over the sound the drum produces, both by processing the direct signal from the microphones and by mapping the data streams to various parameters of that sound. I wanted the original, natural sound of the log drum to be the origin of every sound that is heard, and I wanted all of the sound processing and data mapping to be done in real time. All of my data mapping was done in Max/MSP, and my sound generation was done in Ableton Live.
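
The actual mapping for LogDrum + lives in a Max/MSP patch, so the following is only a rough illustration of the general idea of mapping a sensor data stream to a sound parameter. It is a minimal Python sketch, assuming 10-bit raw readings (0 to 1023, as an Arduino analog input typically reports) and a MIDI-style 0 to 127 target range; the function name `scale_reading` and its default ranges are hypothetical and are not taken from the original patch.

```python
def scale_reading(raw, in_min=0, in_max=1023, out_min=0, out_max=127):
    """Linearly map a raw sensor reading into a parameter range.

    Assumed ranges: 10-bit sensor input, 0-127 parameter output.
    Out-of-range readings are clamped before scaling.
    """
    # Clamp so that a noisy or out-of-range reading cannot overshoot the target range.
    raw = max(in_min, min(in_max, raw))
    # Linear interpolation from the input range to the output range.
    scaled = out_min + (raw - in_min) * (out_max - out_min) / (in_max - in_min)
    return round(scaled)


if __name__ == "__main__":
    # Example: a force-sensitive resistor pressed about halfway.
    print(scale_reading(512))
```

A linear map is the simplest choice; in practice a curve (exponential or logarithmic, for example) is often layered on top so that the physical gesture feels proportional to the audible change.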

SeeThree-DeeToo, in F# Major Mk.I, 2015

SeeThree-DeeToo, in F# Major is an exploration of sound. A musical tale is woven together through the transformation of non-musical sounds into what then becomes the musical schema of the piece. The entirety of the musical material is generated from the original non-musical sounds. This technique lends cohesion to the overall aural tapestry of the piece, allowing for an organic, fluid exploration of the music and the sonic landscape that it creates. It is this composer’s sincerest hope that the story contained within the music of this piece lends itself to the kinship that it shares with another story…a story that was told a long time ago…in a galaxy far, far away…

Crayonada’s Hat, 2014

My instrument of choice for this piece was eMotion Technologies’ Twist sensor suite (and, of course, my hat). While the Twist offered many different data streams that I could use as CC messages, I was also able to remap and reshape those same data streams into triggers, which allowed me to achieve a more interesting performance and musical result. I had several data streams mapped to effects-processing parameters, panning, and volume. I then triggered a specific sequence of events that controlled which tracks were heard. Triggering a track also switched the panning controls to that track, to make it more apparent which track I had just turned on. Following the sequenced triggering, I randomly set the state of each track to on, off, or partly on. The audio samples I used were the individual tracks from a previous composition of mine called Crayonada (hence the title). However, to add some extra aural flavor at the outset, I applied a series of effects (convolving, filtering, and transforming each sample) to each track, morphing them into something that, while still relatively similar to the original composition, was also very different.
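
One common way to reshape a continuous controller stream into triggers is threshold crossing with hysteresis. The Python sketch below illustrates that general technique only; it is not the mapping actually used in the piece. The function name `cc_to_triggers` and the threshold values are hypothetical, and the stream is assumed to carry MIDI-style CC values from 0 to 127.

```python
def cc_to_triggers(values, on_threshold=96, off_threshold=64):
    """Return the indices at which a CC stream fires a trigger.

    A trigger fires when the value rises to on_threshold or above.
    It cannot fire again until the value has dropped below
    off_threshold, which keeps a jittery sensor from retriggering.
    Both thresholds are assumed values for illustration.
    """
    triggers = []
    armed = True  # ready to fire on the next upward crossing
    for index, value in enumerate(values):
        if armed and value >= on_threshold:
            triggers.append(index)
            armed = False
        elif not armed and value < off_threshold:
            armed = True
    return triggers


if __name__ == "__main__":
    stream = [10, 50, 100, 110, 90, 70, 60, 100, 30, 97]
    print(cc_to_triggers(stream))  # indices where a trigger fires
```

The gap between the two thresholds is the design choice that matters: a wide gap demands a deliberate gesture to re-arm the trigger, while a narrow gap makes the mapping more responsive but more prone to accidental retriggering.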

The Bridge (Master’s Terminal Project Recital), 2013

“The Bridge” is the musical culmination of my M.M. degree in Intermedia Music Technology at the University of Oregon. It is a three-movement suite composed of original music that I have written over the last several months. The bridge to which the title refers is the musical bridge that I have tried to create between the styles of popular electronic music and academic electronic music. There can sometimes be a vast disparity between these two musical styles, and as an advocate for both, I wanted to show, in musical terms, that the two do not have to be mutually exclusive.

Crayonada’s Hat (Live @ SEAMUS 2015)

My performance and discussion of Crayonada’s Hat at the SEAMUS 2015 conference at Virginia Tech in Blacksburg, Virginia, on March 27th, 2015.

Untitled Final Project for SensorMusik, 2014

My final project for SensorMusik, Fall 2014.

The Beat Mk.II (Live @ Future Music Oregon, 2012)

An augmented performance of The Beat (including live dance with a Microsoft Kinect), Live @ Future Music Oregon Recital, 03.10.2012.

Hamilton Beach Set (Live @ The Hult Center, Four Corners Concert, 2013)

My band, Hamilton Beach, performing at The Hult Center for the Performing Arts in Eugene, OR, on June 1st, 2013, as part of the Four Corners concert put on by Harmonic Laboratories.